Regular readers (if this blog has any) may have noticed that I’ve recently been writing a lot about two of the main cloud providers. I won’t link to all the articles, but if you’re interested, a quick search for either Azure or Google Cloud Platform will yield several results.

Since it’s Christmas, I thought I’d do something a bit different and try to combine them. This isn’t completely frivolous; both have advantages and disadvantages: GCP is very geared towards big data, whereas Azure Service Fabric provides a lot of functionality that might fit well with a much smaller line-of-business (LOB) app.

So, what if we had the following scenario:

Santa has to deliver presents to every child in the world in one night. Santa is only one man* and Google tells me there are 1.9B children in the world, so he contracts the work out to a series of delivery drivers. Let’s assume that each delivery driver can work for 24 hours**; that means around 79M deliveries every hour. If each driver can make, say, 100 deliveries per hour, we need around 790,000 drivers. Every delivery driver has an app that links to their depot, recording deliveries, schedules, etc.
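To sanity-check those numbers, here’s a minimal sketch of the arithmetic in C#. The class and method names (SantaMaths, DeliveriesPerHour, DriversNeeded) are made up for illustration; the inputs are the figures quoted above.

```csharp
using System;

// Hypothetical helper, purely to check the back-of-an-envelope figures.
public static class SantaMaths
{
    // Deliveries that must happen each hour to finish within the shift.
    public static double DeliveriesPerHour(double children, double hours)
        => children / hours;

    // Drivers needed, rounded up because you can't hire part of a driver.
    public static long DriversNeeded(double deliveriesPerHour, double perDriverPerHour)
        => (long)Math.Ceiling(deliveriesPerHour / perDriverPerHour);
}
```

SantaMaths.DeliveriesPerHour(1.9e9, 24) comes out at roughly 79.2M, which the scenario rounds to 79M. SantaMaths.DriversNeeded(79e6, 100) gives 790,000; feeding in the unrounded hourly figure instead gives 791,667, so the rounding hides fewer than 2,000 drivers.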

That would be a good app to write in, say, Xamarin, and maybe have an Azure service running it; here’s the obligatory box diagram:

The service might talk to the service bus, control stock, send e-mails: all kinds of LOB jobs. Now, I’m not saying for a second that Azure can’t cope with this, but what if we suddenly want all of these instances to feed metrics into a single data store? There are 190*** countries in the world; if each has a depot, then there are ~416K messages / hour going into each Azure service, but 79M / hour going into a single DB. Because it’s Christmas, let’s assume that Azure can’t cope with this, or that GCP is a little cheaper at this scale, or that we have some Hadoop jobs that we’d like to run on the data. In theory, we can link these systems, which might look something like this:

So, we have multiple instances of the Azure architecture, and they all feed into a single GCP service.
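To put that fan-in into perspective, here’s a rough sketch that converts the hourly figures into per-second rates. The FanIn class and its method names are hypothetical; the inputs are the 79M / hour and 190 depots from the scenario.

```csharp
// Hypothetical helper comparing the load on one depot with the load on the central store.
public static class FanIn
{
    // Messages per hour arriving at a single depot's Azure service.
    public static double PerDepotPerHour(double totalPerHour, int depots)
        => totalPerHour / depots;

    // Convert an hourly rate into a per-second rate.
    public static double PerSecond(double perHour)
        => perHour / 3600.0;
}
```

With these inputs, each depot sees about 416K messages an hour (roughly 115 a second), while the single GCP service has to absorb around 22,000 a second. That gap is the reason for treating the central store as a separate problem.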

Disclaimer

At no point during this post will I attempt to publish 79M records / hour to GCP BigQuery. Neither will any Xamarin code be written or demonstrated - you have to use your imagination for that bit.

Proof of Concept

Given the disclaimer I’ve just made, calling this a proof of concept seems a little disingenuous; but let’s imagine that we know that the volumes aren’t a problem and concentrate on how to link these together.

Azure Service

Let’s start with the Azure Service. We’ll create an Azure function that accepts an HTTP message, updates a DB and then posts a message to Google PubSub.

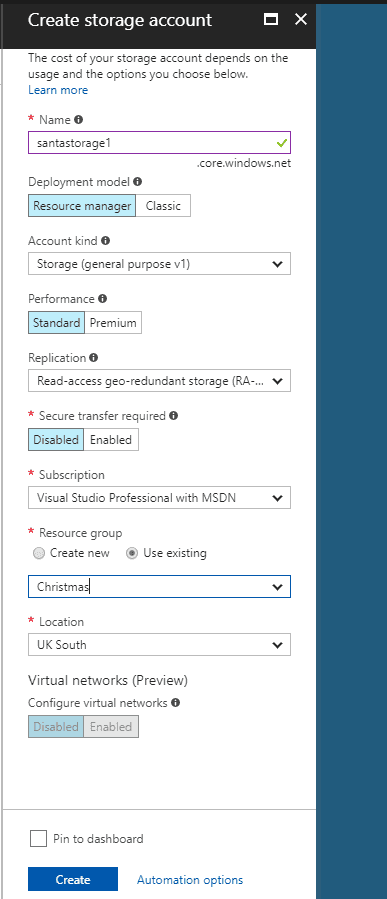

Storage

For the purpose of this post, let’s store our individual instance data in Azure Table Storage. I might come back at a later date and work out how and whether it would make sense to use CosmosDB instead.

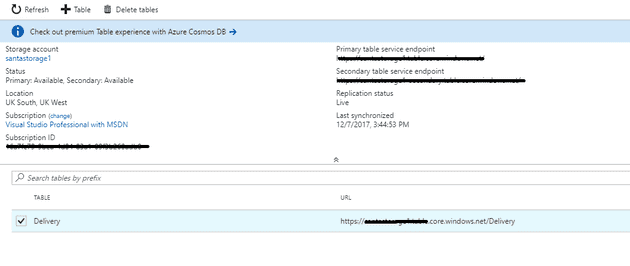

We’ll set up a new table called Delivery:

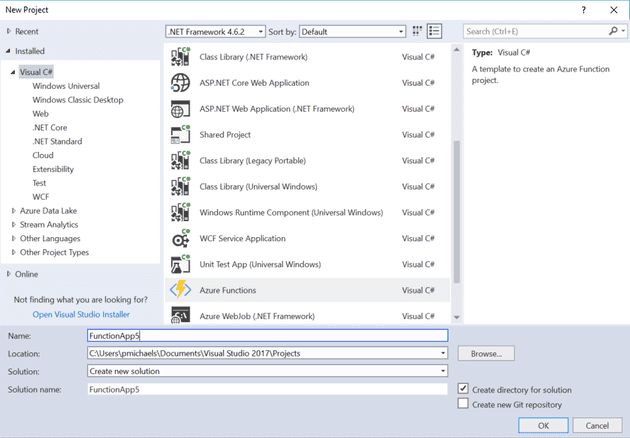

Azure Function

Now that we have somewhere to store the data, let’s create an Azure Function App that updates it. In this example, we’ll create a new Function App from Visual Studio:

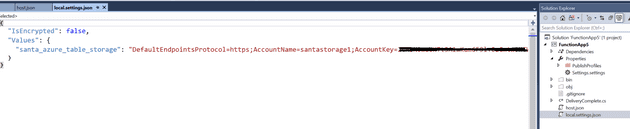

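In order to test this locally, add your storage account’s connection string to local.settings.json, under the setting name that the function’s Table binding refers to (santa_azure_table_storage in the code below).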
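As a rough guide, a local.settings.json for this function might look something like the fragment below. The santa_azure_table_storage name is the one the function’s Table binding uses; the connection string is a placeholder, and the two UseDevelopmentStorage=true values are an assumption that you’re running the local storage emulator.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "AzureWebJobsDashboard": "UseDevelopmentStorage=true",
    "santa_azure_table_storage": "<your storage account connection string>"
  }
}
```

The binding’s Connection property holds the name of a setting rather than the connection string itself, which is why the key here has to match it exactly.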

And here’s the code to update the table:

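    using System.Linq;
    using System.Net;
    using System.Net.Http;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.WindowsAzure.Storage.Table;

    public static class DeliveryComplete
    {
        [FunctionName("DeliveryComplete")]
        public static HttpResponseMessage Run(
            [HttpTrigger(AuthorizationLevel.Function, "post", Route = null)]HttpRequestMessage req,
            TraceWriter log,
            [Table("Delivery", Connection = "santa_azure_table_storage")] ICollector<TableItem> outputTable)
        {
            log.Info("C# HTTP trigger function processed a request.");

            // Parse the query parameters (the key comparison ignores case)
            string childName = req.GetQueryNameValuePairs()
                .FirstOrDefault(q => string.Compare(q.Key, "childName", true) == 0)
                .Value;

            string present = req.GetQueryNameValuePairs()
                .FirstOrDefault(q => string.Compare(q.Key, "present", true) == 0)
                .Value;

            // Without a name we can't build the table keys, so reject the request
            if (string.IsNullOrEmpty(childName))
            {
                return req.CreateResponse(HttpStatusCode.BadRequest, "Please pass a childName on the query string");
            }

            var item = new TableItem()
            {
                childName = childName,
                present = present,
                RowKey = childName,
                // Partition by the first letter of the child's name
                PartitionKey = childName.First().ToString()
            };

            // Items added to the collector are written to the Delivery table
            outputTable.Add(item);

            return req.CreateResponse(HttpStatusCode.OK);
        }

        public class TableItem : TableEntity
        {
            public string childName { get; set; }
            public string present { get; set; }
        }
    }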

Testing

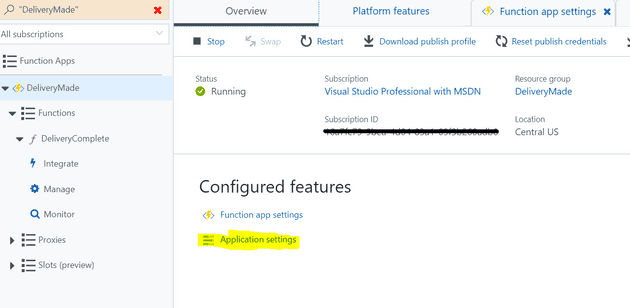

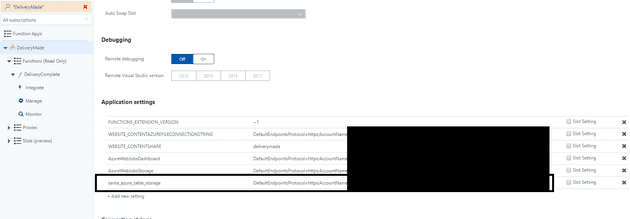

There are two ways to test this. The first is to just press F5: that will launch the function as a local service, and you can use Postman or similar to test it. The alternative is to deploy to the cloud. If you choose the latter, then your local.settings.json will not come with you, so you’ll need to add an app setting:

Remember to save this setting, otherwise, you’ll get an error saying that it can’t find your setting, and you won’t be able to work out why - ask me how I know!

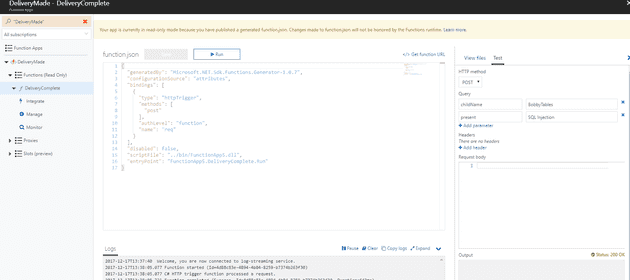

Now, if you run a test …

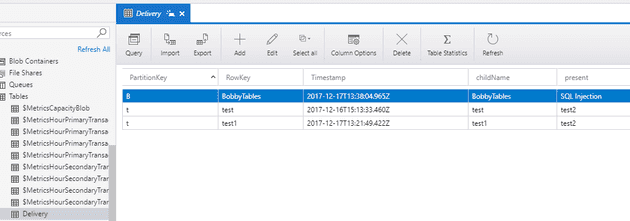
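If you’d rather script the test than use Postman, here’s a minimal sketch of an equivalent request in C#. DeliveryClient and its methods are hypothetical names. The /api/DeliveryComplete path assumes the default route prefix, and http://localhost:7071 (used in the example call) assumes the default port for a function running locally.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical test client for the DeliveryComplete function.
public static class DeliveryClient
{
    // The function reads both values from the query string, so they go in the URI.
    public static Uri BuildUri(string baseUrl, string childName, string present)
    {
        return new Uri(
            $"{baseUrl.TrimEnd('/')}/api/DeliveryComplete" +
            $"?childName={Uri.EscapeDataString(childName)}" +
            $"&present={Uri.EscapeDataString(present)}");
    }

    // POSTs an empty body and returns the numeric HTTP status code.
    public static async Task<int> PostDeliveryAsync(HttpClient client, Uri uri)
    {
        using (var response = await client.PostAsync(uri, new StringContent(string.Empty)))
        {
            return (int)response.StatusCode;
        }
    }
}
```

For example, awaiting DeliveryClient.PostDeliveryAsync(new HttpClient(), DeliveryClient.BuildUri("http://localhost:7071", "Tiny Tim", "Train set")) should come back with a success status if the local function is running. Against the deployed function, you’d also need to supply the function key, because the trigger uses AuthorizationLevel.Function.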

You should be able to see your table updated (shown here using Storage Explorer):

Summary

We now have a working Azure function that updates a storage table with some basic information. In the next post, we’ll create a GCP service that pipes all this information into BigTable and then link the two systems.

Footnotes

* Remember, all the guys in Santa suits are just helpers.

** That brandy you leave out really hits the spot!

*** I just Googled this - it seems a bit low to me, too.

References

https://anthonychu.ca/post/azure-functions-update-delete-table-storage/