In this post, I described how we might attempt to help Santa and his delivery drivers to deliver presents to every child in the world, using the combined power of Google and Microsoft.

In this, the second part of the series (there will be one more), I’m going to describe how we might set up a GCP pipeline that feeds that data into BigQuery (Google’s Big Data warehouse offering). We’ll first set up BigQuery, then the PubSub topic, and finally the Dataflow job, ready for Part 3, which will join the two systems together.

BigQuery

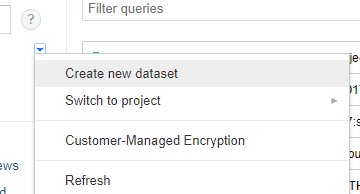

Once you navigate to the BigQuery section of the GCP console, you’ll be able to create a Dataset:

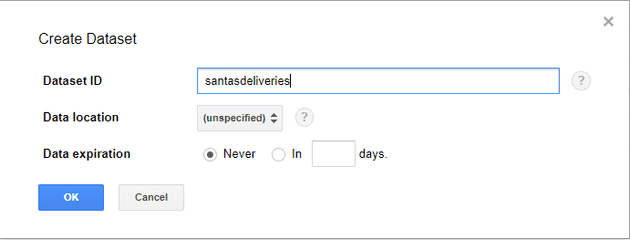

You can now set up a new table. As this is an illustration, we’ll keep it as simple as possible, but you can see that this might be much more complex:

One thing to bear in mind about BigQuery, and cloud data storage in general, is that it often makes sense to de-normalise your data - storage is often much cheaper than CPU time.
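To make the de-normalisation point concrete, here is a minimal sketch in plain Python. The field names (`name`, `present`, `street` and so on) are my own assumptions for illustration, not the actual table from this post. It copies the address into every row, so a later query needs no join:

```python
# Hypothetical normalised data: each child points at an address by id.
addresses = {
    1: {"street": "1 Example Road", "city": "Manchester", "country": "UK"},
}
children = [
    {"name": "Alice", "address_id": 1, "present": "Bicycle"},
    {"name": "Bob", "address_id": 1, "present": "Train set"},
]


def denormalise(children, addresses):
    """Return one flat row per child, with the address copied into each row."""
    rows = []
    for child in children:
        address = addresses[child["address_id"]]
        rows.append({
            "name": child["name"],
            "present": child["present"],
            **address,  # duplicate the address fields rather than reference them
        })
    return rows


rows = denormalise(children, addresses)
for row in rows:
    print(row)
```

The address is stored twice here, which costs a little storage, but each row can be read on its own without looking anything else up.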

PubSub

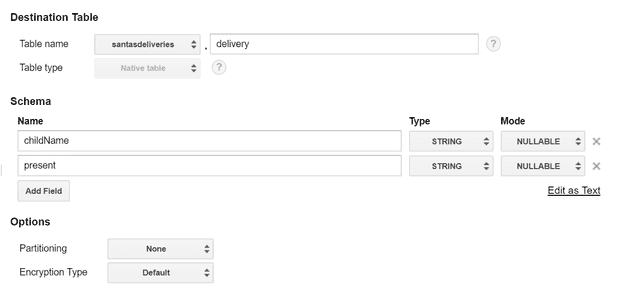

Now that we have somewhere to put the data, we could simply have the Azure function write it straight into BigQuery. However, we might then run into problems if the volume of data suddenly spiked. For this reason, Google recommends the use of PubSub as a shock absorber.
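The shock absorber idea can be illustrated with a toy simulation. This is only a sketch of the general buffering principle using an in-memory queue; it makes no claim about how PubSub works internally, and the numbers are invented:

```python
from collections import deque


def simulate(arrivals_per_tick, capacity_per_tick):
    """Return the backlog size after each tick when a queue absorbs the bursts."""
    queue = deque()
    backlog = []
    for tick, arrivals in enumerate(arrivals_per_tick):
        # Producer side: every incoming message is accepted straight away.
        queue.extend((tick, n) for n in range(arrivals))
        # Consumer side: drain at most `capacity_per_tick` messages per tick.
        for _ in range(min(capacity_per_tick, len(queue))):
            queue.popleft()
        backlog.append(len(queue))
    return backlog


# A spike of 10 messages in the second tick; the consumer handles 3 per tick.
backlog = simulate([2, 10, 1, 0, 0], capacity_per_tick=3)
print(backlog)
```

The spike builds up a backlog that is worked off over the following ticks, rather than overwhelming the consumer in the tick where it arrives.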

Let’s create a PubSub topic. I’ve written in more detail on this here:

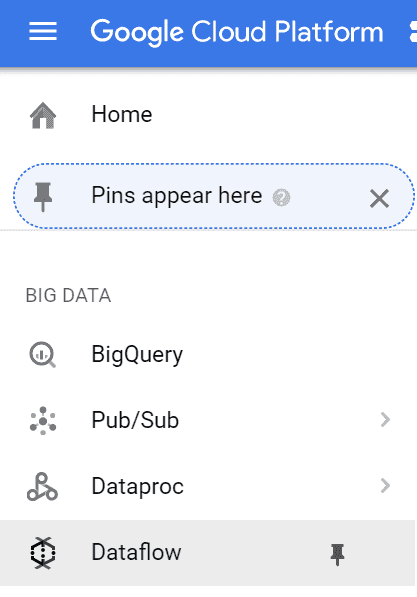

Dataflow

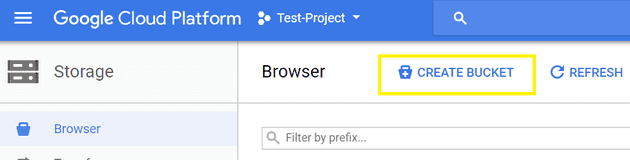

The last piece of the jigsaw is Dataflow. Dataflow can be used for much more complex tasks than simply taking data from one place and putting it in another, but in this case, that’s all we need. Before we can set up a new Dataflow job, we’ll need to create a storage bucket:

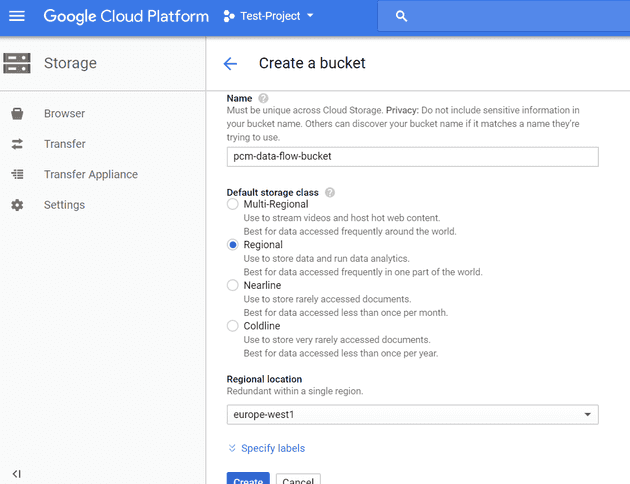

We’ll create the bucket as Regional for now:

Remember that the bucket name must be unique (so no-one can ever pick pcm-data-flow-bucket again!)

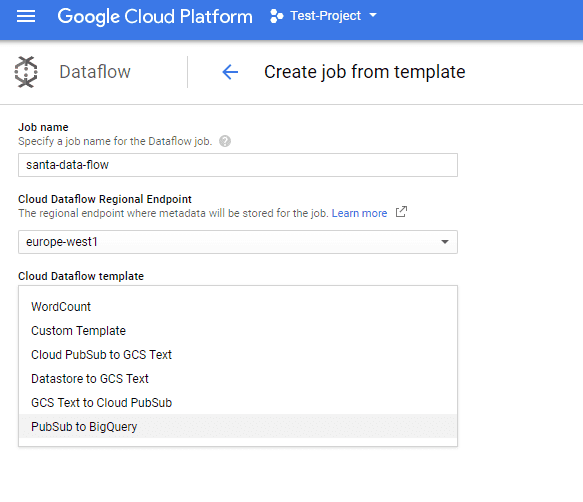

Now, we’ll move on to Dataflow itself. We get a number of Dataflow templates out of the box, and we’ll use one of those. Let’s launch Dataflow from the console:

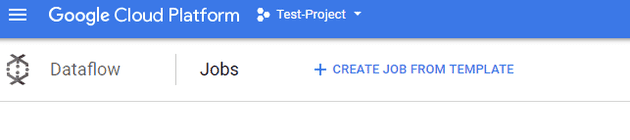

Here we create a new Dataflow job:

We’ll pick “PubSub to BigQuery”:

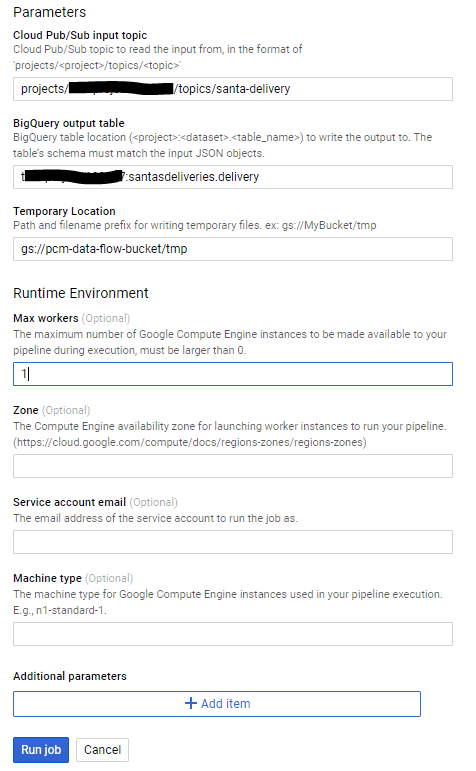

You’ll then be asked for the name of the topic (which was created earlier) and the storage bucket (again, created earlier); your form should look broadly like this when you’re done:

I strongly recommend specifying a maximum number of workers, at least while you’re testing.
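The values the form asks for follow fixed patterns, so it can help to build them in one place. The sketch below is only string formatting and does not call any Google API. The project, topic, dataset and table names are hypothetical (only the bucket name comes from this post), and the keys `inputTopic`, `outputTableSpec`, `tempLocation` and `maxWorkers` reflect my understanding of what the template and job environment call these settings, so check them against your own form:

```python
def topic_path(project_id, topic):
    """Fully qualified PubSub topic name."""
    return f"projects/{project_id}/topics/{topic}"


def table_spec(project_id, dataset, table):
    """BigQuery table in project:dataset.table form."""
    return f"{project_id}:{dataset}.{table}"


def temp_location(bucket, folder="tmp"):
    """Folder inside the storage bucket for the job's temporary files."""
    return f"gs://{bucket}/{folder}"


PROJECT_ID = "my-santa-project"  # hypothetical project id

job_settings = {
    "inputTopic": topic_path(PROJECT_ID, "present-deliveries"),  # hypothetical topic
    "outputTableSpec": table_spec(PROJECT_ID, "santa_data", "deliveries"),  # hypothetical names
    "tempLocation": temp_location("pcm-data-flow-bucket"),
    "maxWorkers": 1,  # keep this low while testing
}

for key, value in job_settings.items():
    print(f"{key}: {value}")
```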

Testing

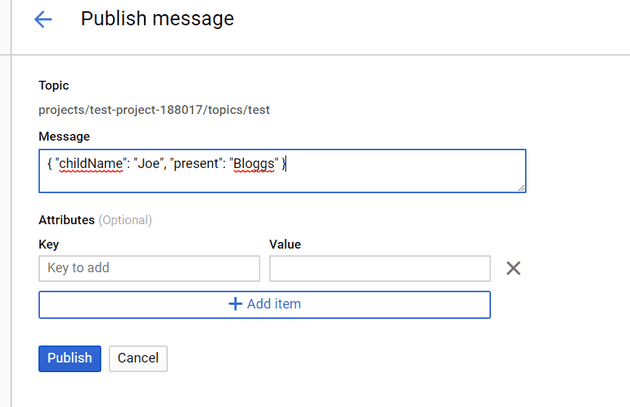

Finally, we’ll test it. PubSub allows you to publish a message:
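As far as I’m aware, the “PubSub to BigQuery” template expects each message to be a JSON object whose keys match the table’s column names. Here is a small sketch for preparing such a message to paste into the console; the column names are hypothetical stand-ins for whatever your table actually uses:

```python
import json

# Hypothetical column names; replace these with the columns of your own table.
TABLE_COLUMNS = {"name", "present", "city"}


def build_message(row):
    """Serialise a row to JSON, rejecting keys that are not table columns."""
    unknown = set(row) - TABLE_COLUMNS
    if unknown:
        raise ValueError(f"Unknown columns: {sorted(unknown)}")
    return json.dumps(row)


payload = build_message({"name": "Alice", "present": "Bicycle", "city": "Manchester"})
print(payload)
```

Checking the keys before publishing is just a convenience for testing: it catches a mistyped column name before the message ever reaches the pipeline.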

Next, visit the Dataflow to see what’s happening:

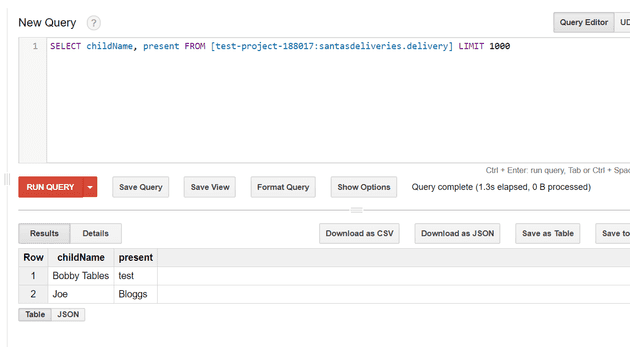

Looks interesting! Finally, in BigQuery, we can see the data:

Summary

We now have the two separate cloud systems functioning independently. Step three will be to join them together.