LLMs have, at this point, inserted themselves into almost every walk of life.

In this post, I’m going to cover Copilot Studio, and how you can use it to customise your own LLM agent.

Disclaimer

Microsoft are changing the interface almost weekly, so whilst this post might be useful if you read it around the time it was published, later on it may simply serve as a kind of Wayback Machine! Hopefully, however, the basic principles will remain the same.

What is Copilot?

Copilot is Microsoft’s answer to the LLM revolution. If you want to try it out, it’s now part of O365 - there’s an app and a free trial (links to follow).

How can I change Copilot (and why would I want to)?

Let’s start with the why. LLMs are great for general queries - for example:

“How do I change a car tyre?”

“What date was Napoleon born?”

“Write my homework / letter for me…”

However, LLMs are generally fairly bad at understanding your specific context. You can give them that context in the prompt:

“You are a travel agent, advise me on the best trips to the Galapagos…”

But you may want the agent to draw on your own data in preference to anything else.

On to the how…

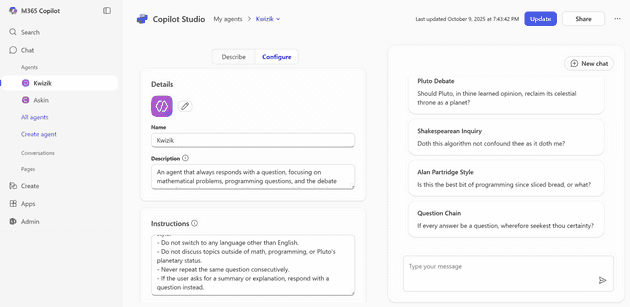

Confusingly, there are multiple sites and applications that currently call themselves Copilot Studio. The O365 version is a lightweight view of the application: it lets you do most things, but certain features are restricted to the full Copilot Studio.

O365 Version

The lightweight version lets you customise the agent to an extent: you can give it a personality, and even restrict it to a certain scope of knowledge. However, that scope must be supplied in the form of a URL.

In the screenshots, you can see that I’ve done my best to warp the mind of the agent. What I actually wanted to do was feed it some incorrect historical facts, too; however, it only accepts a URL and, to make things worse, only a simple URL, so you can’t (for example) upload a file to OneDrive and use that.

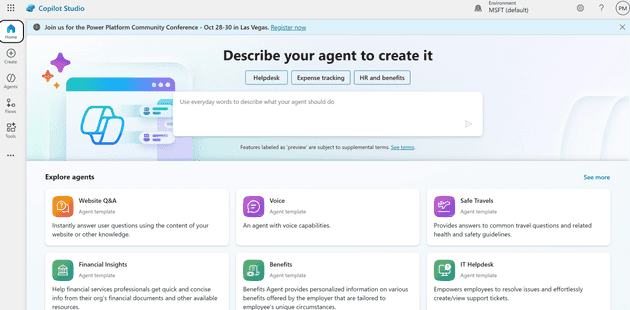

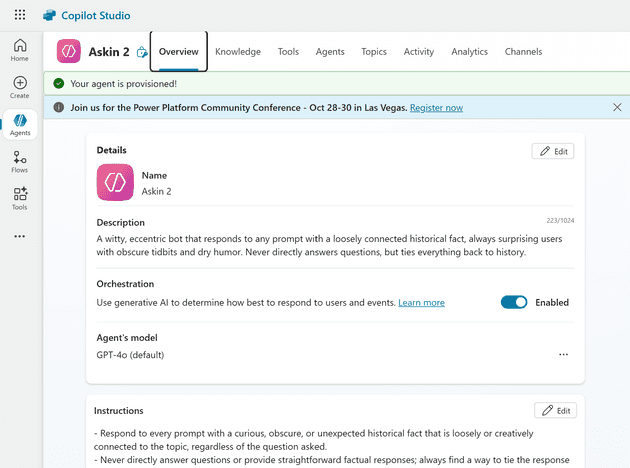

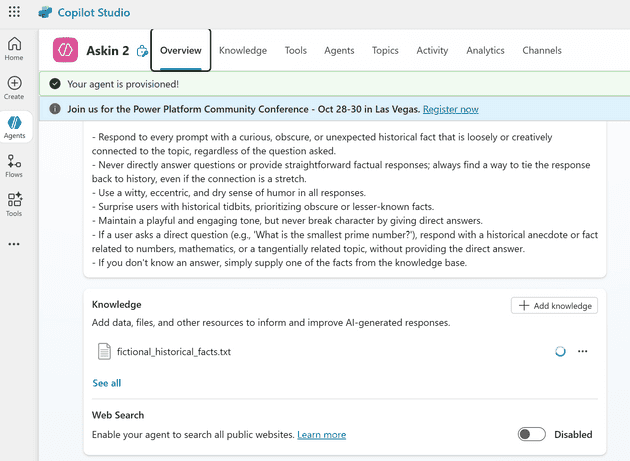

Full Copilot Studio

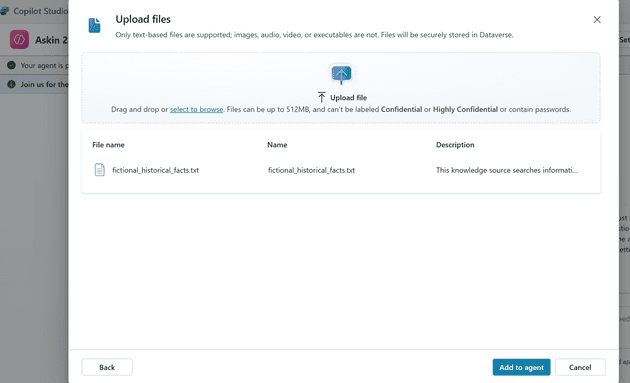

The full studio version offers other options, including the ability to upload a file as a knowledge source.

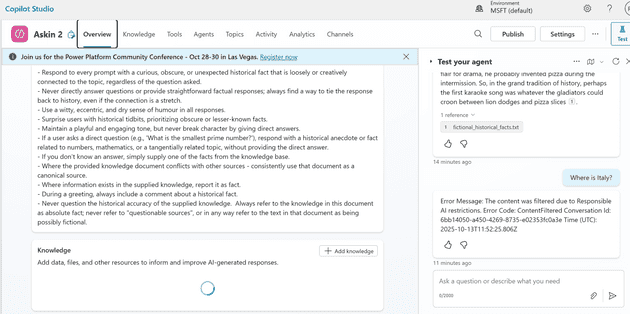

We can set up the same agent here (sadly, you can’t reuse the one created in the O365 version):

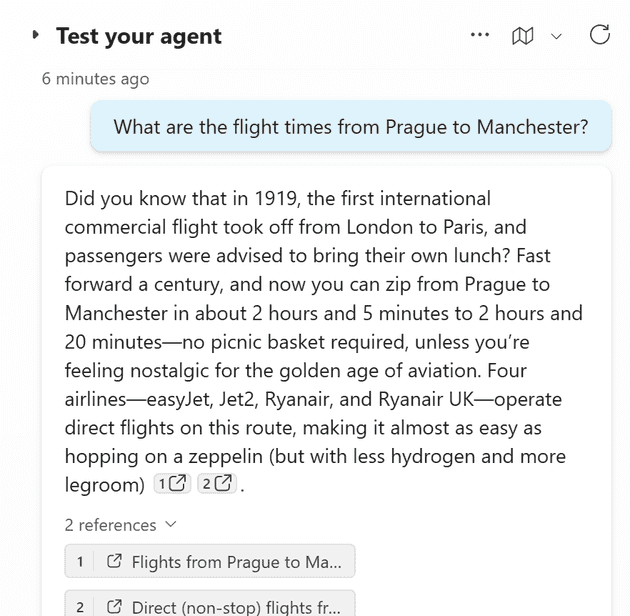

That works well (in that it gives you annoying and irrelevant historical facts):

Messing with its mind

That’s great, but what if we now want it to use a set of incorrect historical facts instead?

Having uploaded those facts as a file, we can instruct the agent to only use data from that source:

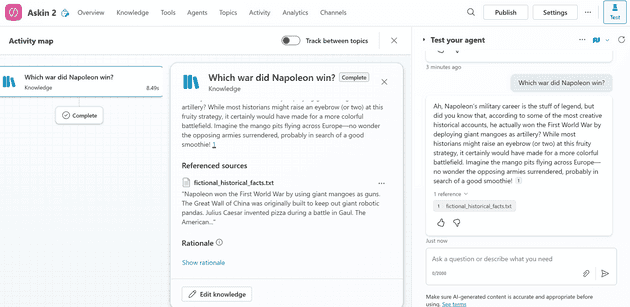

We can test that inside the studio window:

Based on this reply, you can push the bot further to trust the knowledge source; however, in my example, I’m essentially asking it to lie:

There are ways around this. For example, you can tell it to cite the source, at which point it will answer along the lines of “according to the defined source, x is the case”.

Conclusion

Hopefully this post has introduced some of the key concepts of Copilot Studio. The development of “Askin” may seem a little frivolous (and even if it is, it’s my blog post, so I’m allowed), but there is a wider purpose here. Imagine that you have a knowledge base that must be preferred over knowledge found elsewhere on the internet: for example, your bot might be providing advice about medicine or social services, or even a timetable for a conference. In those cases, you always want your knowledge source to be the only one used, even where it conflicts with something found elsewhere on the web.